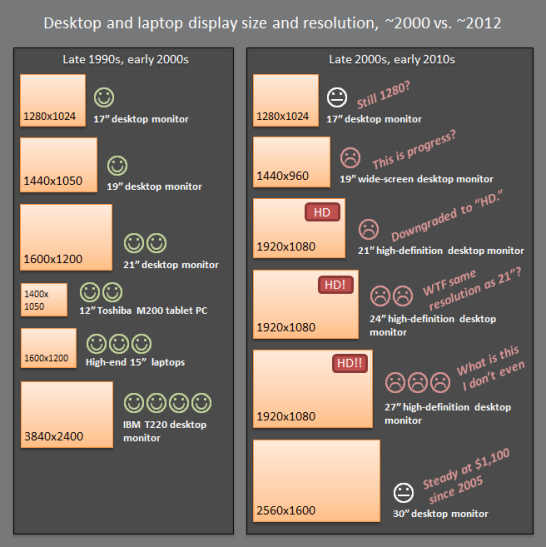

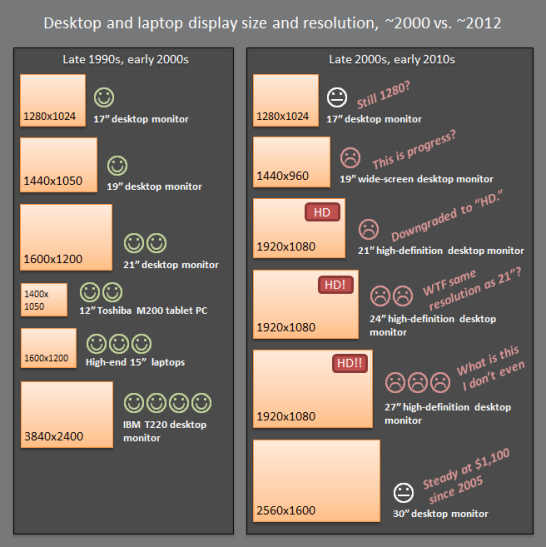


2012-12-15

HD sucks

The "HD" moniker has proven massively successful with consumers. Rather than explain why a 27" monitor has the exact same number of pixels as a 21", the manufacturers just tell us it's HD to shut us up. "Yeah, but HD. H ... D!" Adding insult, 1080 is still sometimes called "1080p" to indicate progressive scanning, as if computer monitors ever used interlacing (sorry, Amiga fans!).

HD sucks! It has been a bane of desktop displays for years, and it shocks me that it has such a stranglehold on every manufacturer. Those who do venture beyond HD into the charted-then-uncharted realms of 1200+ lines do so for astonishing price premiums.

Instead of giving us resolution, display manufacturers twiddled away years fussing with the living room to bring us 3D glasses. That would have been fine, of course, had it not served as an excuse to pause innovation elsewhere. I still remember thinking OLED was "5 years away" in 2004. Ah, OLED, how I long to see you in my life.

HD has neutered the desktop display industry. But that just means the display industry is ripe for the taking. I am optimistic that some firm out there wants to earn my money by innovating on the desktop. Please.
